I’m helping to organise the first nation-wide Agile conference in Brazil, which will take place in Porto Alegre on 22-25 June. Agile Brazil 2010 is a joint effort to bring together all the Agile communities around Brazil (industry and academia), and the conference’s goal is to promote communication and collaboration among its attendees, aiming to disseminate the Agile culture throughout the country. Some of the confirmed international guest speakers are ThoughtWorks’ Chief Scientist Martin Fowler, Philippe Kruchten, and David Hussman.

After working for the past month on building the submission system, I’m happy to announce that we’re inviting you to join as a speaker at this great event too! Tell Brazil about your experiences, present your research, and share your products and learnings! You can find the deadlines and the submission guidelines at:

http://submissoes.agilebrazil.com

To find out more about the conference, please visit our website, or follow @agilebrazil on Twitter.

Agile has shown that doing prolonged analysis upfront bears little to no value to the customer. However, doing none means the project lacks the direction that it needs. The question is, how much analysis is enough before the project starts? This will be the theme of my recently accepted “Inception Workshop: Kickstarting an Agile project” that will be pair-presented with Jenny at XP2010, in Trondheim, Norway.

My journey to find the right balance between generalist and specialist in software has led me to projects where I had to play not only the developer role, but several others to varying degrees (PM, BA, QA, Architect, Coach, team lead, …). This is my attempt to share my knowledge of those different areas, by pairing with an experienced BA and talking about Agile project initiation.

In this workshop, we will share our experience of participating in several project inceptions. Participants will work in a condensed project inception, solving a business problem and using some tools to shape the project for delivery. Our goal is also to learn from other practitioners about the tools and techniques they’re using successfully in their projects.

More details about the session can be found at the XP2010 session description:

http://xp2010.agilealliance.org/node/5380

We are very excited about this session, and we hope to see you in Norway to participate and share your experiences too!

Inspired by a post on the Lean Blog, Pat reminds us that you can’t measure everything effectively. Having written my Master’s thesis on metrics for Agile projects, I’ve read about this in a lot of different places. One approach that is well known to Empirical Software Engineering researchers is the Goal-Question-Metric approach, first published by Victor Basili et al. in the 90’s.

The GQM model suggests a hierarchical view of three levels to define which metrics to use:

- Conceptual level (goal): the motivation for measurement. Measuring things without a purpose and a thorough understanding of the problem will lead to meaningless metrics. This level poses the hardest questions: what’s the purpose? what’s the object of measurement (your product, process, people)? what’s the motivation? who is interested in this goal? what are the quality attributes?

- Operational level (question): at this level, a set of questions is defined to try and correlate the object with the quality attributes we are interested in. These questions should help in understanding and assessing the current situation, but also in identifying ways to determine whether the goal has been achieved.

- Quantitative level (metric): only then is a set of metrics associated with the questions, to try and find a quantitative way to measure and answer them. These can be objective (like code coverage) or subjective (an individual’s ranking of current code quality). Finding these metrics is not easy either.
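
To make the three levels a bit more concrete, here is a rough Ruby sketch of the hierarchy. It is not taken from the GQM literature: the struct names (`Goal`, `Question`, `Metric`), the `gqm_report` helper, and the example goal are all made up for illustration. The only point is that every metric hangs off a question, and every question hangs off a goal.

```ruby
# Hypothetical, minimal model of the three GQM levels (names are assumptions).
Metric   = Struct.new(:name, :kind) # kind is :objective or :subjective
Question = Struct.new(:text, :metrics)
Goal     = Struct.new(:purpose, :object, :quality_attribute, :viewpoint, :questions)

# A made-up goal, with questions and metrics derived from it.
goal = Goal.new(
  "improve", "codebase", "maintainability", "development team",
  [
    Question.new("How well is the code protected by automated tests?",
                 [Metric.new("code coverage", :objective)]),
    Question.new("How does the team perceive the current code quality?",
                 [Metric.new("individual ranking of code quality", :subjective)])
  ]
)

# Walk the hierarchy top-down, so each metric is traceable to a question and a goal.
def gqm_report(goal)
  lines = ["Goal: #{goal.purpose} the #{goal.quality_attribute} of the " \
           "#{goal.object} (for the #{goal.viewpoint})"]
  goal.questions.each do |question|
    lines << "  Question: #{question.text}"
    question.metrics.each do |metric|
      lines << "    Metric: #{metric.name} (#{metric.kind})"
    end
  end
  lines
end

puts gqm_report(goal)
```

A metric that cannot be placed somewhere in a structure like this is probably one of the easy-to-track numbers collected without a purpose.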

It’s tempting to cut corners and jump straight to the things that are easy to measure, especially now that you can collect lots of quantitative data to work with. However, if you don’t stop to think about the goals and motivations for measurement, it’s easy to forget the systemic complexity that surrounds us and look only for the easy-to-track numbers.

Lean management and problem solving are known for taking a very thorough and detailed approach in the understanding phase. To many people this is a paradigm-shifting approach to management. Don’t let the numbers fool you; use them to your advantage.

It all started when I tweeted:

wish I could get hold of a chain of named_scopes without performing any query (which means it can’t be dynamically composed) #activerecord

My team has recently been refactoring our Rails app, and one of our goals is to move complex find queries into more composable/meaningful/maintainable named scopes. Contrary to my expectations, when you call a named scope, Active Record performs a find(:all) query implicitly as you finish your statement:

    class Shirt < ActiveRecord::Base
      named_scope :red, :conditions => {:color => 'red'}
      named_scope :clean, :conditions => {:clean => true}
    end

    >> red_proxy = Shirt.red
      Shirt Load (0.3ms)   SELECT * FROM "shirts" WHERE ("shirts"."color" = 'red')
    +----+-------+-------------------------+-------------------------+-------+
    | id | color | created_at              | updated_at              | clean |
    +----+-------+-------------------------+-------------------------+-------+
    | 5  | red   | 2009-11-16 18:36:37 UTC | 2009-11-16 18:36:37 UTC | false |
    | 6  | red   | 2009-11-16 18:36:37 UTC | 2009-11-16 19:15:18 UTC | true  |
    +----+-------+-------------------------+-------------------------+-------+
    2 rows in set

    >> red_proxy.clean
      Shirt Load (0.4ms)   SELECT * FROM "shirts" WHERE (("shirts"."clean" = 't' AND "shirts"."color" = 'red'))
    +----+-------+-------------------------+-------------------------+-------+
    | id | color | created_at              | updated_at              | clean |
    +----+-------+-------------------------+-------------------------+-------+
    | 6  | red   | 2009-11-16 18:36:37 UTC | 2009-11-16 19:15:18 UTC | true  |
    +----+-------+-------------------------+-------------------------+-------+
    1 row in set

So, even though I can compose named scopes, I can’t defer the query until the point where I decide which operation to execute. I’m obviously not the first one to have this problem, but it seems like it won’t be fixed until a new solution is proposed in Rails 3.

In our particular case, we didn’t even need the proxy object, but just a hash representation of the composed query parameters. We’re using ActiveRecord::Extensions (ar-extensions) to perform some UNION queries to aggregate results, and it expects a list of hashes that represent each query. It would be nice if I could grab the hash from my named scopes without performing the query…

The documentation points to a proxy_options method that you can use to test your named scope, but it didn’t solve my problem, since it only returns the hash for the last scope in your chain:

    >> Shirt.red.proxy_options
    => {:conditions=>{:color=>"red"}}
    >> Shirt.red.clean.proxy_options
    => {:conditions=>{:clean=>true}}

The solution, after digging a while through the source code, was to use an internal method that’s used during the merge algorithm, called current_scoped_methods:

    >> Shirt.red.clean.current_scoped_methods[:find]
    => {:conditions=>"(\"shirts\".\"clean\" = 't' AND \"shirts\".\"color\" = 'red')"}

This is not a perfect solution, but it saved me the trouble of performing the merge myself. I hope the Rails 3 solution addresses these issues, and that this post saves me some time digging through the ActiveRecord source code if I need this again before then :)
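
For comparison, this is roughly what “performing the merge myself” could look like in plain Ruby. It is only a sketch under some loud assumptions: `LazyScope` and its `scoped` method are hypothetical names, not part of ActiveRecord, and the merge only handles hash-style `:conditions` (string conditions would have to be joined with `AND` instead). It never touches a database; it just accumulates the options hash that a chain of scopes would produce.

```ruby
# Hypothetical stand-in for a chain of named scopes (not an ActiveRecord API):
# it only accumulates find options and never runs a query.
class LazyScope
  attr_reader :options

  def initialize(options = {})
    @options = options
  end

  # Returns a new LazyScope whose :conditions combine the existing
  # conditions with the ones from the extra scope definition.
  def scoped(extra)
    merged_conditions = (options[:conditions] || {}).merge(extra[:conditions] || {})
    LazyScope.new(options.merge(extra).merge(:conditions => merged_conditions))
  end
end

# The same option hashes that were passed to named_scope in the Shirt model.
RED   = { :conditions => { :color => 'red' } }
CLEAN = { :conditions => { :clean => true } }

chain = LazyScope.new.scoped(RED).scoped(CLEAN)
p chain.options
```

Unlike `proxy_options`, the resulting hash contains the conditions of every scope in the chain, which is the shape I needed to hand over to ar-extensions.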

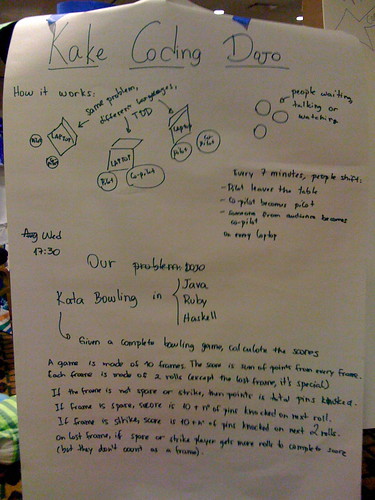

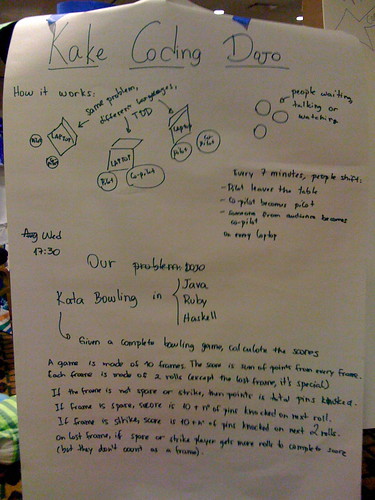

I’m here having a great time in Chicago, at Agile 2009. I will write more about the sessions in later posts, but I wanted to talk about the Coding Dojo we ran at the Open Jam. Organised by my friends from the Dojo@SP (thanks Hugo, Mari, and Thiago!), we tried a projector-less format that went really well. I wrote about the Kake Format a while ago, although the name changed.

It was a lot of fun, and we were lucky to bump into Emmanuel Gaillot in Chicago, who made it to the session as well. We solved the Kata Bowling at three different stations: one in Ruby, one in Haskell, and one in Java. The code is available on GitHub, and these are some pictures I took during the session:

If you want to try out a dynamic format, where people have more chances to participate and train different skills, and you don’t have a projector available, I urge you to try out the Kake Format at your Dojo and share your experiences!

Continuing with the series, this time I want to highlight a very dangerous anti-pattern: using velocity as a performance metric. Before getting into the examples of how it applies to velocity, I want to first explain my view on metrics. I am in favour of metrics and coming up with interesting ways of displaying data (information visualization is a very interesting topic). However, the problem lies in the way that these metrics are used. There are two main types of metrics that I like to categorise as:

- Diagnostics Metrics: these are informative measurements that the team uses to evaluate and improve its own process. The purpose of collecting them is to gain insight into where to improve, and to track whether the proposed improvements are taking effect. They are not associated with a particular individual or with how much value is being produced. They’re merely informative and should have a relatively short life-cycle. As soon as the process improves, another bottleneck will be identified and the team will propose new metrics to measure and improve that area.

- Performance Metrics: these are measurements of how much value your process is delivering. These are the ones you should use to track your organisation’s performance, but they should be chosen very carefully. A good approach is to “measure up”. Value should be measured at the highest level possible, so that it doesn’t fall into one team’s (or individual’s) span of control. People tend to behave according to how they’re measured and if this metric is easy to game, it will be gamed. There should also be just a few of these metrics. An example of one such metric would be a Net Promoter Score (which measures how willing your customer is to recommend you to a friend) or some financial metric like Net Present Value (read Software By Numbers if this interests you). As you can see, these are very much outside of a team’s control and, to be able to score high on them, the team should try and do a good job (instead of gaming the numbers).

Going back to velocity, a very common mistake is to use it as a performance metric instead of diagnostics. Velocity doesn’t satisfy my criteria for a good performance measure. Quite the opposite, it’s a very easy metric to game (as mentioned in my previous posts). When approached as a performance metric, it’s common to see things like:

- Comparing velocity between teams: “Why is Team A slower than Team B?” Maybe because they estimate in different scales? Maybe their iteration length is different? Maybe the team composition is different? So many factors can influence velocity that it’s only useful to compare it within the same team, and even then just to identify trends. The absolute value doesn’t mean much.

- Measuring individual velocity: as highlighted by Pat, this is a VERY DANGEROUS use of velocity, and it can actually harm your process and discourage collaboration.

- A push to always increase velocity: it’s common to have a lower velocity at the beginning of a project, and for it to increase after a number of iterations. In spite of that, I’ve seen teams pushing themselves to keep improving it after they’ve reached a natural limit (Who doesn’t want to go faster, right?). Velocity measures the capability of your team to deliver and, as such, tends to stabilise itself (if you have a stable process and the number is not being gamed). A Control Chart could help you visualise that. As noted by Deming, in a stable process, the way to improve is to change the process.
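
As a rough illustration of the Control Chart idea, the sketch below computes the natural process limits of an individuals (XmR) chart for one team’s velocity. The velocities are made-up numbers, `velocity_limits` is a hypothetical helper, and 2.66 is the standard XmR constant applied to the average moving range. Treat it as a starting point under those assumptions rather than a complete statistical treatment.

```ruby
# Natural process limits for an individuals (XmR) control chart.
# 2.66 is the standard XmR constant applied to the average moving range.
def velocity_limits(velocities)
  mean = velocities.sum.to_f / velocities.size
  # Absolute difference between each pair of consecutive iterations.
  moving_ranges = velocities.each_cons(2).map { |a, b| (b - a).abs }
  average_range = moving_ranges.sum.to_f / moving_ranges.size
  { :mean  => mean,
    :lower => mean - 2.66 * average_range,
    :upper => mean + 2.66 * average_range }
end

# Made-up velocities for eight iterations of a single team.
velocities = [18, 21, 19, 22, 20, 21, 19, 20]
limits = velocity_limits(velocities)

# Iterations outside the limits are worth a conversation;
# variation inside them is just noise from a stable process.
outliers = velocities.reject { |v| v.between?(limits[:lower], limits[:upper]) }

puts "mean: #{limits[:mean]}, lower: #{limits[:lower].round(2)}, upper: #{limits[:upper].round(2)}"
puts "outliers: #{outliers.inspect}"
```

If every point sits inside the limits, pushing the team to “go faster” won’t move the number in any lasting way; only a change to the process will.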

It’s important to remember that velocity is a by-product of your current reality (your team, your processes, your tools). You can only improve your process once it’s stable and you know its current capacity. Velocity is just a health-check number that will tell you your team’s capability. It will not tell you how much value is being delivered or how fast you’re going. You can deliver a lot of points and make trade-offs on quality which, no matter how you measure it, will impact your ability to go fast in the long run. As Uncle Bob says:

“The way to go fast, is to go well”

So let’s stop using velocity to measure performance and look at it as a diagnostic metric to improve our software delivery process.

Continuing with the series on how to misuse velocity, the second anti-pattern I would like to highlight is when teams start making up points. Because the definition of velocity is so simple, it’s easy to game the metric to show what looks like progress. If a team is being measured on velocity (more about this in later posts), it’s quite easy to just start increasing estimates: “If we just double all estimates, the relative sizes stay the same, but our velocity doubles!”. This is an extreme behaviour that would be quickly noticed as a discrepancy, but the same thing could happen on a smaller scale and go unnoticed.
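
The arithmetic behind the “double all estimates” trick is easy to check. In this small sketch the story names and point values are made up, and `velocity` and `relative_sizes` are hypothetical helpers written just for this example:

```ruby
# Made-up estimates (in story points) for the stories delivered in one iteration.
estimates = { "login" => 2, "search" => 3, "checkout" => 5 }

# Velocity is simply the sum of the estimates of the delivered stories.
def velocity(estimates)
  estimates.values.sum
end

# Size of each story relative to the smallest one.
def relative_sizes(estimates)
  smallest = estimates.values.min.to_f
  estimates.transform_values { |points| points / smallest }
end

# "If we just double all estimates..."
inflated = estimates.transform_values { |points| points * 2 }

puts velocity(estimates)
puts velocity(inflated)
puts relative_sizes(estimates) == relative_sizes(inflated)
```

The velocity doubles while the relative sizes are identical, and exactly the same amount of work has been delivered.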

This problem doesn’t only originate from the team: I’ve also seen Project Managers/Scrum Masters coming up with “clever” ways of making up points to count as velocity:

- Counting percentage or half points (as mentioned in my previous post)

- Deciding to split a story to count the partially finished work as complete, and track whatever is left in a separate story (splitting should be business-driven and not tracking-driven: it should only happen when you come up with simpler/incremental ways of delivering value in smaller chunks)

- Counting points on technical tasks. I’ve seen a team that spent a lot of effort in an iteration to make up for accumulated technical debt, and did not have a lot of time to work on new stories. The Project Manager decided to come up with a “refactoring card” and gave it a 16 to try and demonstrate how much effort was spent on such refactoring

- Counting points for in-release bug fixing. On one team, stories were deemed complete in the first iteration, but bugs started to show up in later iterations, impacting the team’s ability to deliver new functionality. Instead of allowing the decrease in velocity to demonstrate how the lack of focus on quality was impacting the team (bugs should be prevented in the first place, right?), the Project Manager decided to estimate and count points on bugs, which kept velocity apparently constant, when in fact a lot less value was being delivered

The next time you catch yourself asking “Should X count as velocity?”, stop, reflect, and ask instead “Should I worry about X happening at all?”. If you are worried about having to track or show progress on things that should be embedded parts of the process (such as activities to prevent bugs or refactoring), chances are that the problem lies somewhere else. Some of these questions might make as much sense as “Should time spent on retrospectives count as velocity?” or “Should going to the bathroom count as velocity?” :-)

I’m sure that these examples, drawn from my personal experience, are just a few of the ways to make up points and misuse velocity. What other similar experiences have you had in your own projects?

Dan North wrote an interesting post about the perils of estimation, questioning our approach to inceptions, release planning, and setting expectations about scope. This made me think about the implications of those factors once a project starts, and I came up with some anti-patterns on the usage of velocity to track progress. This is my first attempt at writing about them.

Before we start, it’s important to understand what velocity means. My simple definition of velocity is the total number of estimation units for the items delivered in an iteration. The estimation unit can be whatever the team chooses: ideal days, hours, pomodoros, or story points. The nature of the items may vary as well: features, use cases, and user stories are common choices. An iteration is a fixed amount of time in which the team will work on delivering those items. Sounds simple? Well… there’s one concept that is commonly overlooked and that’s the source of the first anti-pattern: what does delivered mean?

One of the most common anti-patterns I’ve seen is not having a clear definition of done. Lean thinking tells us that nothing is really done until it’s delivering value, which in software means: code running in production and being used by real users. Although I know very few teams who can deploy code to production at the end of every iteration (some even do more than once per iteration), once a story is considered done, it could be potentially shipped, if the business decides so. There shouldn’t be a lot of extra work after that.

Another bad implication of this anti-pattern is that some teams decide to change the definition of done and count half-completed work to show progress. Some of the symptoms to help diagnose if your team is suffering from this anti-pattern are:

- The team starts tracking dev-complete stories

- “It’s done, but [we need to write the acceptance test/it’s not integrated with the other system/…]”

- “It’s done, but not done-done”

- It takes a lot of extra work to get the story deployed to production

- After finished, the story goes into the next team’s backlog

- Hearing terms like “development team velocity” or “test team velocity”

- Counting half-points or percentages because “if we don’t count it will look like we haven’t worked”

The solution? Remember that velocity is just a number that provides information for the team to understand and improve its process. Forget that you’re tracking it and focus on the entire Value Stream and on what’s really value-added to get things into production. Anything else is just waste. If it’s not done, it’s not done. Accept it, move on, and don’t overcomplicate, because it will only add noise and mask what could have been important information to the team.
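
To tie this back to the definition of velocity above, here is a minimal sketch of what “if it’s not done, it’s not done” means when adding up the numbers. The `Story` struct, its status values, and the stories themselves are made up for illustration; your team’s definition of done decides what `:done` really means.

```ruby
# Hypothetical story record; :status values are assumptions for this example.
Story = Struct.new(:title, :points, :status)

# Only stories that meet the definition of done count.
# There is no partial credit for dev-complete or in-progress work.
def iteration_velocity(stories)
  stories.select { |story| story.status == :done }.sum(&:points)
end

stories = [
  Story.new("Export report", 3, :done),
  Story.new("Reset password", 5, :done),
  Story.new("Import contacts", 8, :dev_complete), # still waiting for acceptance tests
  Story.new("Audit log", 2, :in_progress)
]

puts iteration_velocity(stories)
```

The 8 points for the dev-complete story simply don’t show up until the story is really done, and that drop is exactly the information the team needs to see.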

Last week marked the 10th edition of the XP 200x conference, held in Sardinia, Italy. Francisco and I were there to present an extended version of our Lego Lean Game. Since ours was the first session on the first day of the program, we were expecting a small audience, but it turned out to be quite well attended, with about 20-25 people.

We started the session a bit delayed, due to the lack of room organisation: I was a bit shocked when we arrived and the room was arranged as a normal “lecture room”, rather than the usual group tables (that we requested a week before). Projector and flipchart were not available, so it took us about 15 minutes to have everything ready to begin.

The slow start, however, did not get in the way of the overall workshop. We had designed the activities in a flexible way that allows us to adapt their length just-in-time, so we still managed to cover everything we wanted without having to rush.

The first half of the workshop was mostly the same version we presented last year in Buenos Aires, with slight modifications based on feedback we got from participants. The second half, however, was mostly new and we included an activity to allow each team to come up with their own processes (rather than following ours). This turned out to be a great success! Each group came up with different ideas and, by watching the other teams perform, we had an interesting discussion about the different approaches and results. We now think that the original version is too condensed :-)

The feedback we received after the session was great and a lot of people asked us for the material to run the session themselves. Francisco and I have a “game package” that we can share with those interested in running the game. Get in touch with us if you’re interested! You can find more photos of our presentation here, here, and here (thanks to Hubert for sharing his pictures!). The slides are also available:

We are very interested in your feedback. So, if you were at the conference or want to use the material to run the workshop, please let us know! Share your experiences and help us make it better!

XP 2009 is happening from 25 to 29 May in Sardinia, Italy, and I will be there attending and presenting at some interesting workshops:

- The Lean Lego Game: Francisco and I have been improving our workshop since we last presented it at Agiles 2008 in Argentina, and we will be presenting a long version (180 minutes) on Monday, May 25th. We have just a few “seats” available to participate in the session (20-24) and they will be filled on a first-come, first-served basis. If more people show up, we have plans to try not to turn anyone away, though.

- Test Driven Development: Performing Art: Emily Bache kindly invited me to present a Prepared Kata at her workshop and I will be pairing with Francisco for 30-40 minutes, programming in Ruby with RSpec/Cucumber. Should be fun to “perform” and watch the other pairs as well. Looking forward to that session on Wednesday afternoon!

My fellow ThoughtWorker Pat Kua will be there presenting a workshop as well. I will try to brush up my (lately lazy) writing skills and publish some conference reports. I hope to see you all there in Sardinia!
